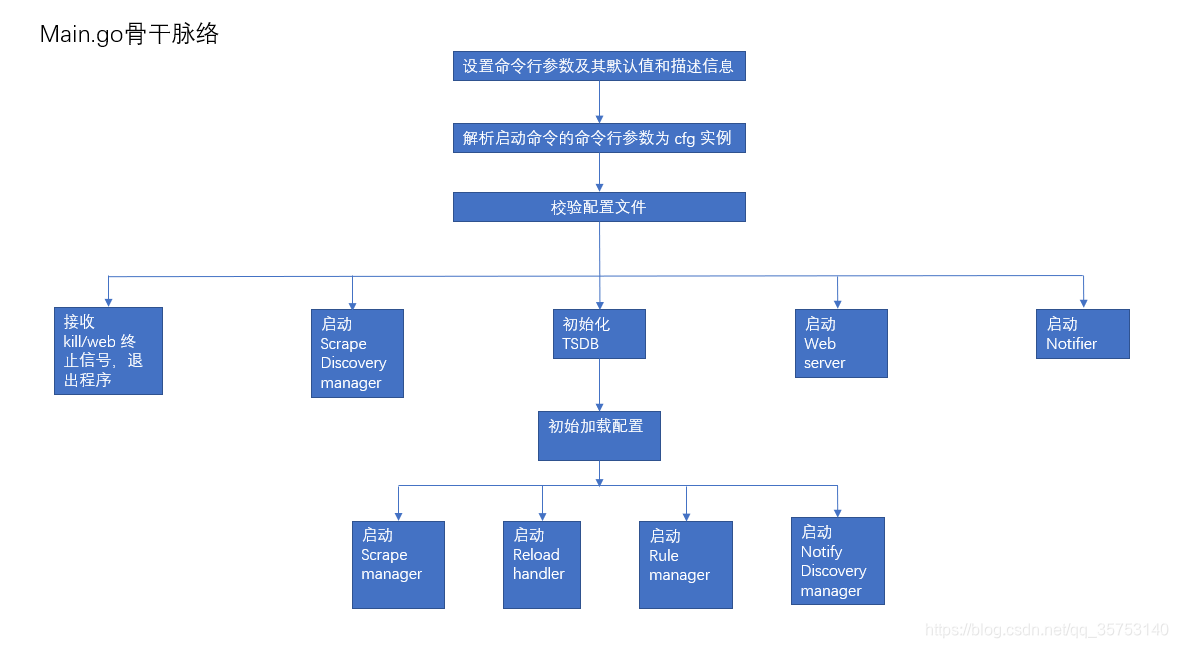

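Main workflow

- Define the command-line flags with their default values and descriptions

- Parse the command-line arguments of the start command into the cfg instance

- Validate the configuration file (set via --config.file), which defaults to prometheus.yml

- Log "Starting Prometheus" and the host system information

- Initialize the subtask objects

- Start all subtasks concurrently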
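
The first two steps can be sketched with the standard library's `flag` package. This is only an illustration under stated assumptions: Prometheus itself uses kingpin, and the `config` struct and `parseFlags` helper below are hypothetical names that do not appear in the original source.

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// config is a hypothetical, trimmed-down stand-in for the cfg struct.
type config struct {
	configFile    string
	listenAddress string
}

// parseFlags registers each flag with a default value and a description,
// then parses args into a config value.
func parseFlags(args []string) (config, error) {
	var cfg config
	fs := flag.NewFlagSet("prometheus", flag.ContinueOnError)
	fs.StringVar(&cfg.configFile, "config.file", "prometheus.yml",
		"Prometheus configuration file path.")
	fs.StringVar(&cfg.listenAddress, "web.listen-address", "0.0.0.0:9090",
		"Address to listen on for UI, API, and telemetry.")
	if err := fs.Parse(args); err != nil {
		return config{}, err
	}
	return cfg, nil
}

func main() {
	cfg, err := parseFlags(os.Args[1:])
	if err != nil {
		// Mirror the exit code used for argument errors in the walkthrough.
		os.Exit(2)
	}
	fmt.Println(cfg.configFile, cfg.listenAddress)
}
```

Flags that are not passed keep their defaults, which is why the configuration file falls back to prometheus.yml.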

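Subtasks, each executed in its own goroutine

- Receive a termination signal (kill or web request) and exit the program

- Start the scrape discovery manager

- Start the notify discovery manager

- Start the scrape manager

- Start the reload handler

- Load the initial configuration

- Start the rule manager

- Initialize the TSDB

- Start the web server

- Start the notifier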
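
How a set of such goroutine-run subtasks can be started together and torn down together is sketched below using only the standard library. It imitates the behaviour of the oklog/run Group that the code uses (the first subtask to return causes all the others to be interrupted), but `miniGroup` and `demo` are hypothetical names and this is not the library's actual code.

```go
package main

import (
	"errors"
	"fmt"
)

// actor pairs a subtask with the function that interrupts it.
type actor struct {
	execute   func() error
	interrupt func(error)
}

// miniGroup is a hypothetical, simplified stand-in for run.Group.
type miniGroup struct {
	actors []actor
}

// Add registers a subtask and its interrupt function.
func (g *miniGroup) Add(execute func() error, interrupt func(error)) {
	g.actors = append(g.actors, actor{execute, interrupt})
}

// Run starts every subtask in its own goroutine, waits for the first one
// to return, interrupts all subtasks, waits for the rest to finish, and
// returns the result of the first one.
func (g *miniGroup) Run() error {
	if len(g.actors) == 0 {
		return nil
	}
	results := make(chan error, len(g.actors))
	for _, a := range g.actors {
		go func(a actor) { results <- a.execute() }(a)
	}
	first := <-results
	for _, a := range g.actors {
		a.interrupt(first)
	}
	for i := 1; i < len(g.actors); i++ {
		<-results
	}
	return first
}

// demo runs one long-lived subtask and one that fails immediately.
func demo() error {
	var g miniGroup
	cancel := make(chan struct{})
	// A long-running subtask that exits only when interrupted.
	g.Add(func() error {
		<-cancel
		return nil
	}, func(error) { close(cancel) })
	// A subtask that fails right away, which tears down the whole group.
	g.Add(func() error {
		return errors.New("web server failed")
	}, func(error) {})
	return g.Run()
}

func main() {
	fmt.Println(demo())
}
```

Because every interrupt function is called once any subtask returns, each subtask needs an interrupt function that makes its execute function return.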

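Points worth noting

- A channel whose close is guarded by sync.Once signals the other concurrent subtasks that configuration loading has completed. The subtasks that wait for this signal, and so start only after the configuration is loaded, are:

  - scrape manager

  - reload handler

  - rule manager

  - notifier manager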

- The initial configuration-loading subtask can proceed only after DB initialization has completed.

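- The subtasks are started concurrently with the Group type from https://github.com/oklog/run. It is similar in purpose to golang.org/x/sync/errgroup: as soon as one subtask returns, all the other subtasks are told to exit. It has two main methods:

  - Add() registers a subtask. It takes two arguments: the function that executes the subtask, and the function that is called to interrupt it.

  - Run() starts one goroutine per subtask and runs them concurrently.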

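- The configuration is (re)loaded at these times:

  - when a SIGHUP signal is received

  - when the web API receives a reload request

  - when the program first starts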

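- readyStorage wraps the tsdb and provides the following methods to implement the Storage interface:

  - Set: sets the tsdb and startTimeMargin

  - StartTime: returns the current time plus startTimeMargin

  - Querier

  - ChunkQuerier

  - Appender

  - Close

  It also provides methods that implement the api_v1.TSDBAdminStats and api_v2.TSDBAdmin interfaces.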
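
The idea behind readyStorage, a wrapper that can be handed to other components before the real storage exists, can be sketched as follows. This is a simplified illustration: `lazyStorage`, `backend`, `nopBackend`, and `errNotReady` are hypothetical names, and only the Set, StartTime, and Close behaviour described above is modelled.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// errNotReady is a hypothetical error returned until Set has been called.
var errNotReady = errors.New("storage not ready")

// backend is a hypothetical minimal interface for the wrapped storage.
type backend interface {
	Close() error
}

// nopBackend is a placeholder backend used for the demonstration.
type nopBackend struct{}

func (nopBackend) Close() error { return nil }

// lazyStorage can be shared before the real storage has been opened.
type lazyStorage struct {
	mtx             sync.RWMutex
	db              backend
	startTimeMargin int64 // milliseconds
}

// Set installs the real storage and the start time margin.
func (s *lazyStorage) Set(db backend, startTimeMargin int64) {
	s.mtx.Lock()
	defer s.mtx.Unlock()
	s.db = db
	s.startTimeMargin = startTimeMargin
}

// StartTime returns the current time in milliseconds plus the margin,
// or errNotReady if the storage has not been set yet.
func (s *lazyStorage) StartTime() (int64, error) {
	s.mtx.RLock()
	defer s.mtx.RUnlock()
	if s.db == nil {
		return 0, errNotReady
	}
	return time.Now().Unix()*1000 + s.startTimeMargin, nil
}

// Close closes the wrapped storage if there is one.
func (s *lazyStorage) Close() error {
	s.mtx.RLock()
	defer s.mtx.RUnlock()
	if s.db == nil {
		return nil
	}
	return s.db.Close()
}

func main() {
	s := &lazyStorage{}
	_, err := s.StartTime()
	fmt.Println("before Set:", err)

	s.Set(nopBackend{}, 2*60*60*1000)
	_, err = s.StartTime()
	fmt.Println("after Set:", err)
}
```

This indirection is what lets the storage wrapper be passed around at construction time even though the TSDB is opened later, inside its own subtask.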

var (

// Declare two metrics that monitor Prometheus itself.

configSuccess = prometheus.NewGauge(prometheus.GaugeOpts{

Name: "prometheus_config_last_reload_successful",

Help: "Whether the last configuration reload attempt was successful.",

})

configSuccessTime = prometheus.NewGauge(prometheus.GaugeOpts{

Name: "prometheus_config_last_reload_success_timestamp_seconds",

Help: "Timestamp of the last successful configuration reload.",

})

// Default data retention period: 15 days.

defaultRetentionString = "15d"

defaultRetentionDuration model.Duration

)

func init() {

prometheus.MustRegister(version.NewCollector("prometheus"))

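// Convert the default retention period from a string to model.Duration,

// whose underlying type is time.Duration.

var err error

// model.ParseDuration matches defaultRetentionString against a regular expression,

// extracts the value for each unit, and computes the total number of milliseconds.

// For example, "1y2w3d4h5m6s7ms" yields the submatches [1y2w3d4h5m6s7ms 1y 1 2w 2 3d 3 4h 4 5m 5 6s 6 7ms 7];

// each value is then multiplied by the length of its unit.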

defaultRetentionDuration, err = model.ParseDuration(defaultRetentionString)

if err != nil {

panic(err)

}

}

func main() {

if os.Getenv("DEBUG") != "" {

runtime.SetBlockProfileRate(20)

runtime.SetMutexProfileFraction(20)

}

var (

oldFlagRetentionDuration model.Duration

newFlagRetentionDuration model.Duration

)

// Startup configuration options.

cfg := struct {

configFile string

localStoragePath string

notifier notifier.Options

notifierTimeout model.Duration

forGracePeriod model.Duration

outageTolerance model.Duration

resendDelay model.Duration

web web.Options

tsdb tsdbOptions

lookbackDelta model.Duration

webTimeout model.Duration

queryTimeout model.Duration

queryConcurrency int

queryMaxSamples int

RemoteFlushDeadline model.Duration

prometheusURL string

corsRegexString string

promlogConfig promlog.Config

}{

notifier: notifier.Options{

// The default registerer comes with the process and Go collectors already registered.

Registerer: prometheus.DefaultRegisterer,

},

web: web.Options{

Registerer: prometheus.DefaultRegisterer,

Gatherer: prometheus.DefaultGatherer,

},

// Configuration for the go-kit logger.

promlogConfig: promlog.Config{},

}

// Create the kingpin.Application, named after the base name of Args[0], i.e. prometheus.

a := kingpin.New(filepath.Base(os.Args[0]), "The Prometheus monitoring server")

// Define the flags and their default values.

a.Version(version.Print("prometheus"))

a.HelpFlag.Short('h')

a.Flag("config.file", "Prometheus configuration file path.").

Default("prometheus.yml").StringVar(&cfg.configFile)

a.Flag("web.listen-address", "Address to listen on for UI, API, and telemetry.").

Default("0.0.0.0:9090").StringVar(&cfg.web.ListenAddress)

// The web.config.file flag.

httpsConfig := httpsflag.AddFlags(a)

// Web read timeout.

a.Flag("web.read-timeout",

"Maximum duration before timing out read of the request, and closing idle connections.").

Default("5m").SetValue(&cfg.webTimeout)

// Maximum number of simultaneous web connections.

a.Flag("web.max-connections", "Maximum number of simultaneous connections.").

Default("512").IntVar(&cfg.web.MaxConnections)

a.Flag("web.external-url",

"The URL under which Prometheus is externally reachable (for example, if Prometheus is served via a reverse proxy). Used for generating relative and absolute links back to Prometheus itself. If the URL has a path portion, it will be used to prefix all HTTP endpoints served by Prometheus. If omitted, relevant URL components will be derived automatically.").

PlaceHolder("<URL>").StringVar(&cfg.prometheusURL)

a.Flag("web.route-prefix",

"Prefix for the internal routes of web endpoints. Defaults to path of --web.external-url.").

PlaceHolder("<path>").StringVar(&cfg.web.RoutePrefix)

a.Flag("web.user-assets", "Path to static asset directory, available at /user.").

PlaceHolder("<path>").StringVar(&cfg.web.UserAssetsPath)

// Allow hot-reloading the configuration file or shutting down the server via HTTP request.

a.Flag("web.enable-lifecycle", "Enable shutdown and reload via HTTP request.").

Default("false").BoolVar(&cfg.web.EnableLifecycle)

a.Flag("web.enable-admin-api", "Enable API endpoints for admin control actions.").

Default("false").BoolVar(&cfg.web.EnableAdminAPI)

a.Flag("web.console.templates", "Path to the console template directory, available at /consoles.").

Default("consoles").StringVar(&cfg.web.ConsoleTemplatesPath)

a.Flag("web.console.libraries", "Path to the console library directory.").

Default("console_libraries").StringVar(&cfg.web.ConsoleLibrariesPath)

a.Flag("web.page-title", "Document title of Prometheus instance.").

Default("Prometheus Time Series Collection and Processing Server").StringVar(&cfg.web.PageTitle)

// Restrict the allowed CORS origins.

a.Flag("web.cors.origin", `Regex for CORS origin. It is fully anchored. Example: 'https?://(domain1|domain2)\.com'`).

Default(".*").StringVar(&cfg.corsRegexString)

a.Flag("storage.tsdb.path", "Base path for metrics storage.").

Default("data/").StringVar(&cfg.localStoragePath)

// By default, in-memory data is persisted to disk as a block every two hours.

a.Flag("storage.tsdb.min-block-duration", "Minimum duration of a data block before being persisted. For use in testing.").

Hidden().Default("2h").SetValue(&cfg.tsdb.MinBlockDuration)

a.Flag("storage.tsdb.max-block-duration",

"Maximum duration compacted blocks may span. For use in testing. (Defaults to 10% of the retention period.)").

Hidden().PlaceHolder("<duration>").SetValue(&cfg.tsdb.MaxBlockDuration)

a.Flag("storage.tsdb.wal-segment-size",

"Size at which to split the tsdb WAL segment files. Example: 100MB").

Hidden().PlaceHolder("<bytes>").BytesVar(&cfg.tsdb.WALSegmentSize)

a.Flag("storage.tsdb.retention", "[DEPRECATED] How long to retain samples in storage. This flag has been deprecated, use \"storage.tsdb.retention.time\" instead.").

SetValue(&oldFlagRetentionDuration)

// How long time series data is retained; defaults to 15 days.

a.Flag("storage.tsdb.retention.time", "How long to retain samples in storage. When this flag is set it overrides \"storage.tsdb.retention\". If neither this flag nor \"storage.tsdb.retention\" nor \"storage.tsdb.retention.size\" is set, the retention time defaults to "+defaultRetentionString+". Units Supported: y, w, d, h, m, s, ms.").

SetValue(&newFlagRetentionDuration)

a.Flag("storage.tsdb.retention.size", "[EXPERIMENTAL] Maximum number of bytes that can be stored for blocks. A unit is required, supported units: B, KB, MB, GB, TB, PB, EB. Ex: \"512MB\". This flag is experimental and can be changed in future releases.").

BytesVar(&cfg.tsdb.MaxBytes)

// Do not create a lock file in the data directory.

a.Flag("storage.tsdb.no-lockfile", "Do not create lockfile in data directory.").

Default("false").BoolVar(&cfg.tsdb.NoLockfile)

a.Flag("storage.tsdb.allow-overlapping-blocks", "[EXPERIMENTAL] Allow overlapping blocks, which in turn enables vertical compaction and vertical query merge.").

Default("false").BoolVar(&cfg.tsdb.AllowOverlappingBlocks)

// Compress the WAL; enabled by default.

a.Flag("storage.tsdb.wal-compression", "Compress the tsdb WAL.").

Default("true").BoolVar(&cfg.tsdb.WALCompression)

// How long to wait for samples to be flushed to remote storage on shutdown or config reload; defaults to 1 minute.

a.Flag("storage.remote.flush-deadline", "How long to wait flushing sample on shutdown or config reload.").

Default("1m").PlaceHolder("<duration>").SetValue(&cfg.RemoteFlushDeadline)

a.Flag("storage.remote.read-sample-limit", "Maximum overall number of samples to return via the remote read interface, in a single query. 0 means no limit. This limit is ignored for streamed response types.").

Default("5e7").IntVar(&cfg.web.RemoteReadSampleLimit)

a.Flag("storage.remote.read-concurrent-limit", "Maximum number of concurrent remote read calls. 0 means no limit.").

Default("10").IntVar(&cfg.web.RemoteReadConcurrencyLimit)

a.Flag("storage.remote.read-max-bytes-in-frame", "Maximum number of bytes in a single frame for streaming remote read response types before marshalling. Note that client might have limit on frame size as well. 1MB as recommended by protobuf by default.").

Default("1048576").IntVar(&cfg.web.RemoteReadBytesInFrame)

// Maximum Prometheus outage that is tolerated when restoring the "for" state of an alert.

a.Flag("rules.alert.for-outage-tolerance", "Max time to tolerate prometheus outage for restoring \"for\" state of alert.").

Default("1h").SetValue(&cfg.outageTolerance)

a.Flag("rules.alert.for-grace-period", "Minimum duration between alert and restored \"for\" state. This is maintained only for alerts with configured \"for\" time greater than grace period.").

Default("10m").SetValue(&cfg.forGracePeriod)

// Minimum interval before an alert is resent to Alertmanager.

a.Flag("rules.alert.resend-delay", "Minimum amount of time to wait before resending an alert to Alertmanager.").

Default("1m").SetValue(&cfg.resendDelay)

a.Flag("scrape.adjust-timestamps", "Adjust scrape timestamps by up to 2ms to align them to the intended schedule. See https://github.com/prometheus/prometheus/issues/7846 for more context. Experimental. This flag will be removed in a future release.").

Hidden().Default("true").BoolVar(&scrape.AlignScrapeTimestamps)

// Capacity of the queue of notifications waiting to be sent to Alertmanager.

a.Flag("alertmanager.notification-queue-capacity", "The capacity of the queue for pending Alertmanager notifications.").

Default("10000").IntVar(&cfg.notifier.QueueCapacity)

// Timeout for sending alerts to Alertmanager.

a.Flag("alertmanager.timeout", "Timeout for sending alerts to Alertmanager.").

Default("10s").SetValue(&cfg.notifierTimeout)

a.Flag("query.lookback-delta", "The maximum lookback duration for retrieving metrics during expression evaluations and federation.").

Default("5m").SetValue(&cfg.lookbackDelta)

// Query timeout; defaults to 2 minutes.

a.Flag("query.timeout", "Maximum time a query may take before being aborted.").

Default("2m").SetValue(&cfg.queryTimeout)

// Number of concurrent queries; it can probably be raised when CPU and memory are plentiful.

a.Flag("query.max-concurrency", "Maximum number of queries executed concurrently.").

Default("20").IntVar(&cfg.queryConcurrency)

// Maximum number of samples a single query can load into memory, which is also the most it can return.

a.Flag("query.max-samples", "Maximum number of samples a single query can load into memory. Note that queries will fail if they try to load more samples than this into memory, so this also limits the number of samples a query can return.").

Default("50000000").IntVar(&cfg.queryMaxSamples)

// Add the logging flags: log level ([debug, info, warn, error]) and log format ([logfmt, json]).

promlogflag.AddFlags(a, &cfg.promlogConfig)

// Parse and validate the arguments.

_, err := a.Parse(os.Args[1:])

if err != nil {

fmt.Fprintln(os.Stderr, errors.Wrapf(err, "Error parsing commandline arguments"))

a.Usage(os.Args[1:])

os.Exit(2)

}

// Initialize the logger from the parsed logging flags.

logger := promlog.New(&cfg.promlogConfig)

// Validate the configuration parameters.

cfg.web.ExternalURL, err = computeExternalURL(cfg.prometheusURL, cfg.web.ListenAddress)

if err != nil {

fmt.Fprintln(os.Stderr, errors.Wrapf(err, "parse external URL %q", cfg.prometheusURL))

os.Exit(2)

}

cfg.web.CORSOrigin, err = compileCORSRegexString(cfg.corsRegexString)

if err != nil {

fmt.Fprintln(os.Stderr, errors.Wrapf(err, "could not compile CORS regex string %q", cfg.corsRegexString))

os.Exit(2)

}

// Throw error for invalid config before starting other components.

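// Check that the Prometheus configuration file named on the command line is valid; if the file (yml) is empty, the default configuration is used.

// The parsed result is not assigned to a config object here; this step only validates.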

if _, err := config.LoadFile(cfg.configFile); err != nil {

level.Error(logger).Log("msg", fmt.Sprintf("Error loading config (--config.file=%s)", cfg.configFile), "err", err)

os.Exit(2)

}

// Now that the validity of the config is established, set the config

// success metrics accordingly, although the config isn't really loaded

// yet. This will happen later (including setting these metrics again),

// but if we don't do it now, the metrics will stay at zero until the

// startup procedure is complete, which might take long enough to

// trigger alerts about an invalid config.

// In short: the configuration file is known to be valid, so prometheus_config_last_reload_successful is set to 1 before the configuration is actually loaded.

// Otherwise the metric would stay at 0 for the whole startup, which can take long enough to trigger invalid-config alerts.

configSuccess.Set(1)

configSuccessTime.SetToCurrentTime()

cfg.web.ReadTimeout = time.Duration(cfg.webTimeout)

// Default -web.route-prefix to path of -web.external-url.

if cfg.web.RoutePrefix == "" {

cfg.web.RoutePrefix = cfg.web.ExternalURL.Path

}

// RoutePrefix must always be at least '/'.

cfg.web.RoutePrefix = "/" + strings.Trim(cfg.web.RoutePrefix, "/")

{ // Time retention settings.

if oldFlagRetentionDuration != 0 {

level.Warn(logger).Log("deprecation_notice", "'storage.tsdb.retention' flag is deprecated use 'storage.tsdb.retention.time' instead.")

cfg.tsdb.RetentionDuration = oldFlagRetentionDuration

}

// When the new flag is set it takes precedence.

if newFlagRetentionDuration != 0 {

cfg.tsdb.RetentionDuration = newFlagRetentionDuration

}

if cfg.tsdb.RetentionDuration == 0 && cfg.tsdb.MaxBytes == 0 {

cfg.tsdb.RetentionDuration = defaultRetentionDuration

level.Info(logger).Log("msg", "No time or size retention was set so using the default time retention", "duration", defaultRetentionDuration)

}

// Check for overflows. This limits our max retention to 100y.

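// If the configured retention overflows the integer (and so turns negative), it is capped at 100 years. The largest value that can be set is 292y.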

if cfg.tsdb.RetentionDuration < 0 {

y, err := model.ParseDuration("100y")

if err != nil {

panic(err)

}

cfg.tsdb.RetentionDuration = y

level.Warn(logger).Log("msg", "Time retention value is too high. Limiting to: "+y.String())

}

}

{ // Max block size settings.

if cfg.tsdb.MaxBlockDuration == 0 {

maxBlockDuration, err := model.ParseDuration("31d")

if err != nil {

panic(err)

}

// When the time retention is set and not too big use to define the max block duration.

if cfg.tsdb.RetentionDuration != 0 && cfg.tsdb.RetentionDuration/10 < maxBlockDuration {

maxBlockDuration = cfg.tsdb.RetentionDuration / 10

}

cfg.tsdb.MaxBlockDuration = maxBlockDuration

}

}

// Set the default subquery step to the default global evaluation interval.

noStepSubqueryInterval := &safePromQLNoStepSubqueryInterval{}

noStepSubqueryInterval.Set(config.DefaultGlobalConfig.EvaluationInterval)

// Above level 6, the k8s client would log bearer tokens in clear-text.

klog.ClampLevel(6)

klog.SetLogger(log.With(logger, "component", "k8s_client_runtime"))

klogv2.ClampLevel(6)

klogv2.SetLogger(log.With(logger, "component", "k8s_client_runtime"))

// Log the startup message.

level.Info(logger).Log("msg", "Starting Prometheus", "version", version.Info())

// Compatibility warning for 32-bit systems.

if bits.UintSize < 64 {

level.Warn(logger).Log("msg", "This Prometheus binary has not been compiled for a 64-bit architecture. Due to virtual memory constraints of 32-bit systems, it is highly recommended to switch to a 64-bit binary of Prometheus.", "GOARCH", runtime.GOARCH)

}

// Log system information.

level.Info(logger).Log("build_context", version.BuildContext())

level.Info(logger).Log("host_details", prom_runtime.Uname())

level.Info(logger).Log("fd_limits", prom_runtime.FdLimits())

level.Info(logger).Log("vm_limits", prom_runtime.VMLimits())

// Declare the scraper- and storage-related variables.

var (

localStorage = &readyStorage{}

scraper = &readyScrapeManager{}

remoteStorage = remote.NewStorage(log.With(logger, "component", "remote"), prometheus.DefaultRegisterer, localStorage.StartTime, cfg.localStoragePath, time.Duration(cfg.RemoteFlushDeadline), scraper)

fanoutStorage = storage.NewFanout(logger, localStorage, remoteStorage)

)

var (

// Context for the web handler, with its cancel function.

ctxWeb, cancelWeb = context.WithCancel(context.Background())

ctxRule = context.Background()

// The notifier sends alerts to Alertmanager.

notifierManager = notifier.NewManager(&cfg.notifier, log.With(logger, "component", "notifier"))

// Context for scraping, with its cancel function.

ctxScrape, cancelScrape = context.WithCancel(context.Background())

// Create the scrape discovery manager, using the scrape context.

discoveryManagerScrape = discovery.NewManager(ctxScrape, log.With(logger, "component", "discovery manager scrape"), discovery.Name("scrape"))

// There are two discovery.Manager instances: scrape above and notify below.

ctxNotify, cancelNotify = context.WithCancel(context.Background())

discoveryManagerNotify = discovery.NewManager(ctxNotify, log.With(logger, "component", "discovery manager notify"), discovery.Name("notify"))

// Create the scrapeManager. The fanout storage is a proxy that reads from and writes to multiple underlying storages.

scrapeManager = scrape.NewManager(log.With(logger, "component", "scrape manager"), fanoutStorage)

// Declare the PromQL engine options.

opts = promql.EngineOpts{

Logger: log.With(logger, "component", "query engine"),

Reg: prometheus.DefaultRegisterer,

MaxSamples: cfg.queryMaxSamples,

Timeout: time.Duration(cfg.queryTimeout),

ActiveQueryTracker: promql.NewActiveQueryTracker(cfg.localStoragePath, cfg.queryConcurrency, log.With(logger, "component", "activeQueryTracker")),

LookbackDelta: time.Duration(cfg.lookbackDelta),

NoStepSubqueryIntervalFn: noStepSubqueryInterval.Get,

}

// Create the PromQL query engine.

queryEngine = promql.NewEngine(opts)

// Create the ruleManager.

ruleManager = rules.NewManager(&rules.ManagerOptions{

Appendable: fanoutStorage,

Queryable: localStorage,

QueryFunc: rules.EngineQueryFunc(queryEngine, fanoutStorage),

NotifyFunc: sendAlerts(notifierManager, cfg.web.ExternalURL.String()),

Context: ctxRule,

ExternalURL: cfg.web.ExternalURL,

Registerer: prometheus.DefaultRegisterer,

Logger: log.With(logger, "component", "rule manager"),

OutageTolerance: time.Duration(cfg.outageTolerance),

ForGracePeriod: time.Duration(cfg.forGracePeriod),

ResendDelay: time.Duration(cfg.resendDelay),

})

)

// Set the scrapeManager on the scraper.

scraper.Set(scrapeManager)

// Copy the options parsed from the command line, and the components created above, into cfg.web.

cfg.web.Context = ctxWeb

cfg.web.TSDBRetentionDuration = cfg.tsdb.RetentionDuration

cfg.web.TSDBMaxBytes = cfg.tsdb.MaxBytes

cfg.web.TSDBDir = cfg.localStoragePath

cfg.web.LocalStorage = localStorage

cfg.web.Storage = fanoutStorage

cfg.web.QueryEngine = queryEngine

cfg.web.ScrapeManager = scrapeManager

cfg.web.RuleManager = ruleManager

cfg.web.Notifier = notifierManager

cfg.web.LookbackDelta = time.Duration(cfg.lookbackDelta)

cfg.web.Version = &web.PrometheusVersion{

Version: version.Version,

Revision: version.Revision,

Branch: version.Branch,

BuildUser: version.BuildUser,

BuildDate: version.BuildDate,

GoVersion: version.GoVersion,

}

// Record all of the Prometheus startup flags in this map.

cfg.web.Flags = map[string]string{}

// Exclude kingpin default flags to expose only Prometheus ones.

boilerplateFlags := kingpin.New("", "").Version("")

for _, f := range a.Model().Flags {

if boilerplateFlags.GetFlag(f.Name) != nil {

continue

}

cfg.web.Flags[f.Name] = f.Value.String()

}

// Depends on cfg.web.ScrapeManager so needs to be after cfg.web.ScrapeManager = scrapeManager.

webHandler := web.New(log.With(logger, "component", "web"), &cfg.web)

// Monitor outgoing connections on default transport with conntrack.

http.DefaultTransport.(*http.Transport).DialContext = conntrack.NewDialContextFunc(

conntrack.DialWithTracing(),

)

// Declare the reloaders.

reloaders := []reloader{

{

name: "remote_storage",

reloader: remoteStorage.ApplyConfig,

}, {

name: "web_handler",

reloader: webHandler.ApplyConfig,

}, {

name: "query_engine",

reloader: func(cfg *config.Config) error {

if cfg.GlobalConfig.QueryLogFile == "" {

queryEngine.SetQueryLogger(nil)

return nil

}

l, err := logging.NewJSONFileLogger(cfg.GlobalConfig.QueryLogFile)

if err != nil {

return err

}

queryEngine.SetQueryLogger(l)

return nil

},

}, {

// The Scrape and notifier managers need to reload before the Discovery manager as

// they need to read the most updated config when receiving the new targets list.

name: "scrape",

reloader: scrapeManager.ApplyConfig,

}, {

name: "scrape_sd",

reloader: func(cfg *config.Config) error {

c := make(map[string]discovery.Configs)

for _, v := range cfg.ScrapeConfigs {

c[v.JobName] = v.ServiceDiscoveryConfigs

}

return discoveryManagerScrape.ApplyConfig(c)

},

}, {

name: "notify",

reloader: notifierManager.ApplyConfig,

}, {

name: "notify_sd",

reloader: func(cfg *config.Config) error {

c := make(map[string]discovery.Configs)

for k, v := range cfg.AlertingConfig.AlertmanagerConfigs.ToMap() {

c[k] = v.ServiceDiscoveryConfigs

}

return discoveryManagerNotify.ApplyConfig(c)

},

}, {

name: "rules",

reloader: func(cfg *config.Config) error {

// Get all rule files matching the configuration paths.

var files []string

for _, pat := range cfg.RuleFiles {

fs, err := filepath.Glob(pat)

if err != nil {

// The only error can be a bad pattern.

return errors.Wrapf(err, "error retrieving rule files for %s", pat)

}

files = append(files, fs...)

}

return ruleManager.Update(

time.Duration(cfg.GlobalConfig.EvaluationInterval),

files,

cfg.GlobalConfig.ExternalLabels,

)

},

},

}

// Register the metrics that monitor Prometheus itself.

prometheus.MustRegister(configSuccess)

prometheus.MustRegister(configSuccessTime)

// Start all components while we wait for TSDB to open but only load

// initial config and mark ourselves as ready after it completed.

// This channel signals that DB initialization has completed.

dbOpen := make(chan struct{})

// sync.Once is used to make sure we can close the channel at different execution stages(SIGTERM or when the config is loaded).

type closeOnce struct {

C chan struct{}

once sync.Once

Close func()

}

// Wait until the server is ready to handle reloading.

reloadReady := &closeOnce{

C: make(chan struct{}),

}

reloadReady.Close = func() {

reloadReady.once.Do(func() {

close(reloadReady.C)

})

}

// Initialize Jaeger tracing.

closer, err := initTracing(logger)

if err != nil {

level.Error(logger).Log("msg", "Unable to init tracing", "err", err)

os.Exit(2)

}

defer closer.Close()

// Create the listener for the web server.

listener, err := webHandler.Listener()

if err != nil {

level.Error(logger).Log("msg", "Unable to start web listener", "err", err)

os.Exit(1)

}

// Validate the additional web configuration, such as TLS.

err = toolkit_web.Validate(*httpsConfig)

if err != nil {

level.Error(logger).Log("msg", "Unable to validate web configuration file", "err", err)

os.Exit(1)

}

// run.Group from oklog/run is similar in purpose to errgroup from golang.org/x/sync: it manages the execution of a set of concurrent tasks.

var g run.Group

{

// Termination handler.

term := make(chan os.Signal, 1)

signal.Notify(term, os.Interrupt, syscall.SIGTERM)

cancel := make(chan struct{})

g.Add(

// Exit on receiving a termination signal.

func() error {

// Don't forget to release the reloadReady channel so that waiting blocks can exit normally.

select {

case <-term:

level.Warn(logger).Log("msg", "Received SIGTERM, exiting gracefully...")

reloadReady.Close()

case <-webHandler.Quit():

level.Warn(logger).Log("msg", "Received termination request via web service, exiting gracefully...")

case <-cancel:

reloadReady.Close()

}

return nil

},

func(err error) {

close(cancel)

},

)

}

{

// Scrape discovery manager.

g.Add(

func() error {

err := discoveryManagerScrape.Run()

level.Info(logger).Log("msg", "Scrape discovery manager stopped")

return err

},

func(err error) {

level.Info(logger).Log("msg", "Stopping scrape discovery manager...")

cancelScrape()

},

)

}

{

// Notify discovery manager.

g.Add(

func() error {

err := discoveryManagerNotify.Run()

level.Info(logger).Log("msg", "Notify discovery manager stopped")

return err

},

func(err error) {

level.Info(logger).Log("msg", "Stopping notify discovery manager...")

cancelNotify()

},

)

}

{

// Scrape manager.

g.Add(

func() error {

// When the scrape manager receives a new targets list

// it needs to read a valid config for each job.

// It depends on the config being in sync with the discovery manager so

// we wait until the config is fully loaded.

<-reloadReady.C

err := scrapeManager.Run(discoveryManagerScrape.SyncCh())

level.Info(logger).Log("msg", "Scrape manager stopped")

return err

},

func(err error) {

// Scrape manager needs to be stopped before closing the local TSDB

// so that it doesn't try to write samples to a closed storage.

level.Info(logger).Log("msg", "Stopping scrape manager...")

scrapeManager.Stop()

},

)

}

{

// Reload handler.

// Make sure that sighup handler is registered with a redirect to the channel before the potentially

// long and synchronous tsdb init.

hup := make(chan os.Signal, 1)

signal.Notify(hup, syscall.SIGHUP)

cancel := make(chan struct{})

g.Add(

func() error {

<-reloadReady.C

for {

select {

case <-hup:

if err := reloadConfig(cfg.configFile, logger, noStepSubqueryInterval, reloaders...); err != nil {

level.Error(logger).Log("msg", "Error reloading config", "err", err)

}

case rc := <-webHandler.Reload():

if err := reloadConfig(cfg.configFile, logger, noStepSubqueryInterval, reloaders...); err != nil {

level.Error(logger).Log("msg", "Error reloading config", "err", err)

rc <- err

} else {

rc <- nil

}

case <-cancel:

return nil

}

}

},

func(err error) {

// Wait for any in-progress reloads to complete to avoid

// reloading things after they have been shutdown.

cancel <- struct{}{}

},

)

}

{

// Initial configuration loading.

cancel := make(chan struct{})

g.Add(

func() error {

select {

case <-dbOpen:

// In case a shutdown is initiated before the dbOpen is released

case <-cancel:

reloadReady.Close()

return nil

}

if err := reloadConfig(cfg.configFile, logger, noStepSubqueryInterval, reloaders...); err != nil {

return errors.Wrapf(err, "error loading config from %q", cfg.configFile)

}

reloadReady.Close()

webHandler.Ready()

level.Info(logger).Log("msg", "Server is ready to receive web requests.")

<-cancel

return nil

},

func(err error) {

close(cancel)

},

)

}

{

// Rule manager.

g.Add(

func() error {

<-reloadReady.C

ruleManager.Run()

return nil

},

func(err error) {

ruleManager.Stop()

},

)

}

{

// TSDB.

opts := cfg.tsdb.ToTSDBOptions()

cancel := make(chan struct{})

g.Add(

func() error {

level.Info(logger).Log("msg", "Starting TSDB ...")

if cfg.tsdb.WALSegmentSize != 0 {

if cfg.tsdb.WALSegmentSize < 10*1024*1024 || cfg.tsdb.WALSegmentSize > 256*1024*1024 {

return errors.New("flag 'storage.tsdb.wal-segment-size' must be set between 10MB and 256MB")

}

}

db, err := openDBWithMetrics(

cfg.localStoragePath,

logger,

prometheus.DefaultRegisterer,

&opts,

)

if err != nil {

return errors.Wrapf(err, "opening storage failed")

}

switch fsType := prom_runtime.Statfs(cfg.localStoragePath); fsType {

case "NFS_SUPER_MAGIC":

level.Warn(logger).Log("fs_type", fsType, "msg", "This filesystem is not supported and may lead to data corruption and data loss. Please carefully read https://prometheus.io/docs/prometheus/latest/storage/ to learn more about supported filesystems.")

default:

level.Info(logger).Log("fs_type", fsType)

}

level.Info(logger).Log("msg", "TSDB started")

level.Debug(logger).Log("msg", "TSDB options",

"MinBlockDuration", cfg.tsdb.MinBlockDuration,

"MaxBlockDuration", cfg.tsdb.MaxBlockDuration,

"MaxBytes", cfg.tsdb.MaxBytes,

"NoLockfile", cfg.tsdb.NoLockfile,

"RetentionDuration", cfg.tsdb.RetentionDuration,

"WALSegmentSize", cfg.tsdb.WALSegmentSize,

"AllowOverlappingBlocks", cfg.tsdb.AllowOverlappingBlocks,

"WALCompression", cfg.tsdb.WALCompression,

)

startTimeMargin := int64(2 * time.Duration(cfg.tsdb.MinBlockDuration).Seconds() * 1000)

localStorage.Set(db, startTimeMargin)

// DB startup is complete.

close(dbOpen)

<-cancel

return nil

},

func(err error) {

if err := fanoutStorage.Close(); err != nil {

level.Error(logger).Log("msg", "Error stopping storage", "err", err)

}

close(cancel)

},

)

}

{

// Web handler.

g.Add(

func() error {

if err := webHandler.Run(ctxWeb, listener, *httpsConfig); err != nil {

return errors.Wrapf(err, "error starting web server")

}

return nil

},

func(err error) {

cancelWeb()

},

)

}

{

// Notifier.

// Calling notifier.Stop() before ruleManager.Stop() will cause a panic if the ruleManager isn't running,

// so keep this interrupt after the ruleManager.Stop().

g.Add(

func() error {

// When the notifier manager receives a new targets list

// it needs to read a valid config for each job.

// It depends on the config being in sync with the discovery manager

// so we wait until the config is fully loaded.

<-reloadReady.C

notifierManager.Run(discoveryManagerNotify.SyncCh())

level.Info(logger).Log("msg", "Notifier manager stopped")

return nil

},

func(err error) {

notifierManager.Stop()

},

)

}

if err := g.Run(); err != nil {

level.Error(logger).Log("err", err)

os.Exit(1)

}

level.Info(logger).Log("msg", "See you next time!")

}

// Open the TSDB and register its metrics.

func openDBWithMetrics(dir string, logger log.Logger, reg prometheus.Registerer, opts *tsdb.Options) (*tsdb.DB, error) {

db, err := tsdb.Open(

dir,

log.With(logger, "component", "tsdb"),

reg,

opts,

)

if err != nil {

return nil, err

}

reg.MustRegister(

prometheus.NewGaugeFunc(prometheus.GaugeOpts{

Name: "prometheus_tsdb_lowest_timestamp_seconds",

Help: "Lowest timestamp value stored in the database.",

}, func() float64 {

bb := db.Blocks()

if len(bb) == 0 {

return float64(db.Head().MinTime() / 1000)

}

return float64(db.Blocks()[0].Meta().MinTime / 1000)

}), prometheus.NewGaugeFunc(prometheus.GaugeOpts{

Name: "prometheus_tsdb_head_min_time_seconds",

Help: "Minimum time bound of the head block.",

}, func() float64 { return float64(db.Head().MinTime() / 1000) }),

prometheus.NewGaugeFunc(prometheus.GaugeOpts{

Name: "prometheus_tsdb_head_max_time_seconds",

Help: "Maximum timestamp of the head block.",

}, func() float64 { return float64(db.Head().MaxTime() / 1000) }),

)

return db, nil

}

type safePromQLNoStepSubqueryInterval struct {

value atomic.Int64

}

func durationToInt64Millis(d time.Duration) int64 {

return int64(d / time.Millisecond)

}

func (i *safePromQLNoStepSubqueryInterval) Set(ev model.Duration) {

i.value.Store(durationToInt64Millis(time.Duration(ev)))

}

func (i *safePromQLNoStepSubqueryInterval) Get(int64) int64 {

return i.value.Load()

}

type reloader struct {

name string

reloader func(*config.Config) error

}

// Reload the configuration.

func reloadConfig(filename string, logger log.Logger, noStepSuqueryInterval *safePromQLNoStepSubqueryInterval, rls ...reloader) (err error) {

start := time.Now()

timings := []interface{}{}

level.Info(logger).Log("msg", "Loading configuration file", "filename", filename)

// On exit, set the configSuccess metric according to whether err is nil.

defer func() {

if err == nil {

configSuccess.Set(1)

configSuccessTime.SetToCurrentTime()

} else {

configSuccess.Set(0)

}

}()

conf, err := config.LoadFile(filename)

if err != nil {

return errors.Wrapf(err, "couldn't load configuration (--config.file=%q)", filename)

}

// A reloader can be the rules reloader, an SD reloader, and so on.

failed := false

for _, rl := range rls {

rstart := time.Now()

// The reloader function is typically the component's ApplyConfig method.

if err := rl.reloader(conf); err != nil {

level.Error(logger).Log("msg", "Failed to apply configuration", "err", err)

failed = true

}

timings = append(timings, rl.name, time.Since(rstart))

}

if failed {

return errors.Errorf("one or more errors occurred while applying the new configuration (--config.file=%q)", filename)

}

// Set noStepSuqueryInterval to the global evaluation interval.

noStepSuqueryInterval.Set(conf.GlobalConfig.EvaluationInterval)

l := []interface{}{"msg", "Completed loading of configuration file", "filename", filename, "totalDuration", time.Since(start)}

level.Info(logger).Log(append(l, timings...)...)

return nil

}
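
Two details of reloadConfig are easy to misread: the `err` declared inside the loop's `if` shadows the named return value, which is why a separate `failed` flag is needed, and the deferred function sees whatever the final `return` assigns. The following is a minimal standalone sketch of that pattern, not Prometheus code. `applier`, `applyAll`, and `errBadRules` are hypothetical names, and a plain bool stands in for the configSuccess gauge.

```go
package main

import (
	"errors"
	"fmt"
)

// errBadRules is a hypothetical error used only for the demonstration.
var errBadRules = errors.New("bad rule file")

// applier is a hypothetical stand-in for the reloader struct.
type applier struct {
	name  string
	apply func(cfg string) error
}

// applyAll runs every applier even if an earlier one fails, then reports a
// single aggregate error. The deferred function derives *ok from the named
// return value after the return statement has assigned it.
func applyAll(cfg string, ok *bool, as ...applier) (err error) {
	defer func() { *ok = err == nil }()

	failed := false
	for _, a := range as {
		// This err shadows the named return value, so a separate flag
		// carries the failure out of the loop.
		if err := a.apply(cfg); err != nil {
			fmt.Printf("failed to apply %s: %v\n", a.name, err)
			failed = true
		}
	}
	if failed {
		return errors.New("one or more errors occurred while applying the new configuration")
	}
	return nil
}

func main() {
	var ok bool
	var applied []string
	err := applyAll("cfg", &ok,
		applier{"scrape", func(string) error { applied = append(applied, "scrape"); return nil }},
		applier{"rules", func(string) error { return errBadRules }},
		applier{"notify", func(string) error { applied = append(applied, "notify"); return nil }},
	)
	fmt.Println(err != nil, ok, applied)
}
```

A failing applier does not stop the ones after it, so a reload can end up partially applied. The aggregate error only signals that at least one component rejected the new configuration.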

func startsOrEndsWithQuote(s string) bool {

return strings.HasPrefix(s, "\"") || strings.HasPrefix(s, "'") ||

strings.HasSuffix(s, "\"") || strings.HasSuffix(s, "'")

}

// compileCORSRegexString compiles given string and adds anchors

func compileCORSRegexString(s string) (*regexp.Regexp, error) {

r, err := relabel.NewRegexp(s)

if err != nil {

return nil, err

}

return r.Regexp, nil

}

// computeExternalURL computes a sanitized external URL from a raw input. It infers unset

// URL parts from the OS and the given listen address.

func computeExternalURL(u, listenAddr string) (*url.URL, error) {

if u == "" {

hostname, err := os.Hostname()

if err != nil {

return nil, err

}

_, port, err := net.SplitHostPort(listenAddr)

if err != nil {

return nil, err

}

u = fmt.Sprintf("http://%s:%s/", hostname, port)

}

if startsOrEndsWithQuote(u) {

return nil, errors.New("URL must not begin or end with quotes")

}

eu, err := url.Parse(u)

if err != nil {

return nil, err

}

ppref := strings.TrimRight(eu.Path, "/")

if ppref != "" && !strings.HasPrefix(ppref, "/") {

ppref = "/" + ppref

}

eu.Path = ppref

return eu, nil

}
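
The path handling at the end of computeExternalURL strips trailing slashes and guarantees a leading slash on any non-empty path. A minimal standalone sketch of just that step follows, assuming only the standard `net/url` and `strings` packages. `normalizePath` is a hypothetical name, and the hostname and port inference is left out.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// normalizePath mirrors only the path normalization of computeExternalURL:
// trailing slashes are removed and a non-empty path always starts with "/".
func normalizePath(raw string) (string, error) {
	u, err := url.Parse(raw)
	if err != nil {
		return "", err
	}
	p := strings.TrimRight(u.Path, "/")
	if p != "" && !strings.HasPrefix(p, "/") {
		p = "/" + p
	}
	u.Path = p
	return u.String(), nil
}

func main() {
	for _, raw := range []string{
		"http://example.com/",
		"http://example.com/prometheus/",
		"http://example.com:9090/a/b//",
	} {
		out, err := normalizePath(raw)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(out)
	}
}
```

Because the path never ends in a slash, callers can append route suffixes that begin with `/` without producing a double slash.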

type sender interface {

Send(alerts ...*notifier.Alert)

}

// sendAlerts implements the rules.NotifyFunc for a Notifier.

func sendAlerts(s sender, externalURL string) rules.NotifyFunc {

return func(ctx context.Context, expr string, alerts ...*rules.Alert) {

var res []*notifier.Alert

for _, alert := range alerts {

a := &notifier.Alert{

StartsAt: alert.FiredAt,

Labels: alert.Labels,

Annotations: alert.Annotations,

GeneratorURL: externalURL + strutil.TableLinkForExpression(expr),

}

if !alert.ResolvedAt.IsZero() {

a.EndsAt = alert.ResolvedAt

} else {

a.EndsAt = alert.ValidUntil

}

res = append(res, a)

}

if len(alerts) > 0 {

s.Send(res...)

}

}

}
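
The only branching logic in sendAlerts is the choice of `EndsAt`: a resolved alert carries its resolution time, while an alert that is still firing carries `ValidUntil`. A minimal standalone sketch of that choice follows. `ruleAlert` and `endsAt` are hypothetical names, the struct is a trimmed-down stand-in for `rules.Alert`, and the four-minute validity window is an arbitrary value picked for the example.

```go
package main

import (
	"fmt"
	"time"
)

// ruleAlert is a hypothetical, trimmed-down stand-in for rules.Alert.
type ruleAlert struct {
	FiredAt    time.Time
	ResolvedAt time.Time
	ValidUntil time.Time
}

// endsAt returns ResolvedAt for a resolved alert and ValidUntil otherwise,
// matching the branch in sendAlerts.
func endsAt(a ruleAlert) time.Time {
	if !a.ResolvedAt.IsZero() {
		return a.ResolvedAt
	}
	return a.ValidUntil
}

func main() {
	fired := time.Date(2021, 1, 1, 12, 0, 0, 0, time.UTC)
	firing := ruleAlert{FiredAt: fired, ValidUntil: fired.Add(4 * time.Minute)}
	resolved := ruleAlert{
		FiredAt:    fired,
		ResolvedAt: fired.Add(time.Minute),
		ValidUntil: fired.Add(4 * time.Minute),
	}
	fmt.Println(endsAt(firing).Format(time.RFC3339))
	fmt.Println(endsAt(resolved).Format(time.RFC3339))
}
```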

// readyStorage implements the Storage interface while allowing to set the actual

// storage at a later point in time.

type readyStorage struct {

mtx sync.RWMutex

db *tsdb.DB

startTimeMargin int64

}

// Set the storage.

func (s *readyStorage) Set(db *tsdb.DB, startTimeMargin int64) {

s.mtx.Lock()

defer s.mtx.Unlock()

s.db = db

s.startTimeMargin = startTimeMargin

}

// get is internal, you should use readyStorage as the front implementation layer.

func (s *readyStorage) get() *tsdb.DB {

s.mtx.RLock()

x := s.db

s.mtx.RUnlock()

return x

}

// StartTime implements the Storage interface.

func (s *readyStorage) StartTime() (int64, error) {

if x := s.get(); x != nil {

var startTime int64

if len(x.Blocks()) > 0 {

startTime = x.Blocks()[0].Meta().MinTime

} else {

startTime = time.Now().Unix() * 1000

}

// Add a safety margin as it may take a few minutes for everything to spin up.

return startTime + s.startTimeMargin, nil

}

return math.MaxInt64, tsdb.ErrNotReady

}

// Querier implements the Storage interface.

func (s *readyStorage) Querier(ctx context.Context, mint, maxt int64) (storage.Querier, error) {

if x := s.get(); x != nil {

return x.Querier(ctx, mint, maxt)

}

return nil, tsdb.ErrNotReady

}

// ChunkQuerier implements the Storage interface.

func (s *readyStorage) ChunkQuerier(ctx context.Context, mint, maxt int64) (storage.ChunkQuerier, error) {

if x := s.get(); x != nil {

return x.ChunkQuerier(ctx, mint, maxt)

}

return nil, tsdb.ErrNotReady

}

// Appender implements the Storage interface.

func (s *readyStorage) Appender(ctx context.Context) storage.Appender {

if x := s.get(); x != nil {

return x.Appender(ctx)

}

return notReadyAppender{}

}

type notReadyAppender struct{}

func (n notReadyAppender) Add(l labels.Labels, t int64, v float64) (uint64, error) {

return 0, tsdb.ErrNotReady

}

func (n notReadyAppender) AddFast(ref uint64, t int64, v float64) error { return tsdb.ErrNotReady }

func (n notReadyAppender) Commit() error { return tsdb.ErrNotReady }

func (n notReadyAppender) Rollback() error { return tsdb.ErrNotReady }

// Close implements the Storage interface.

func (s *readyStorage) Close() error {

if x := s.get(); x != nil {

return x.Close()

}

return nil

}

// CleanTombstones implements the api_v1.TSDBAdminStats and api_v2.TSDBAdmin interfaces.

func (s *readyStorage) CleanTombstones() error {

if x := s.get(); x != nil {

return x.CleanTombstones()

}

return tsdb.ErrNotReady

}

// Delete implements the api_v1.TSDBAdminStats and api_v2.TSDBAdmin interfaces.

func (s *readyStorage) Delete(mint, maxt int64, ms ...*labels.Matcher) error {

if x := s.get(); x != nil {

return x.Delete(mint, maxt, ms...)

}

return tsdb.ErrNotReady

}

// Snapshot implements the api_v1.TSDBAdminStats and api_v2.TSDBAdmin interfaces.

func (s *readyStorage) Snapshot(dir string, withHead bool) error {

if x := s.get(); x != nil {

return x.Snapshot(dir, withHead)

}

return tsdb.ErrNotReady

}

// Stats implements the api_v1.TSDBAdminStats interface.

func (s *readyStorage) Stats(statsByLabelName string) (*tsdb.Stats, error) {

if x := s.get(); x != nil {

return x.Head().Stats(statsByLabelName), nil

}

return nil, tsdb.ErrNotReady

}

// ErrNotReady is returned if the underlying scrape manager is not ready yet.

var ErrNotReady = errors.New("Scrape manager not ready")

// readyScrapeManager allows a scrape manager to be retrieved, even if it is set at a later point in time.

type readyScrapeManager struct {

mtx sync.RWMutex

m *scrape.Manager

}

// Set the scrape manager.

func (rm *readyScrapeManager) Set(m *scrape.Manager) {

rm.mtx.Lock()

defer rm.mtx.Unlock()

rm.m = m

}

// Get the scrape manager. If it is not ready, return an error.

func (rm *readyScrapeManager) Get() (*scrape.Manager, error) {

rm.mtx.RLock()

defer rm.mtx.RUnlock()

if rm.m != nil {

return rm.m, nil

}

return nil, ErrNotReady

}
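
readyStorage and readyScrapeManager share one pattern: a pointer guarded by an RWMutex, filled in later by `Set`, with every accessor returning a "not ready" error until then. This lets other components hold a reference before the real object exists. A minimal standalone sketch of that pattern follows. It is not Prometheus code, and `store`, `readyStore`, and `errNotReady` are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// errNotReady is returned until Set has been called.
var errNotReady = errors.New("not ready")

// store is a hypothetical stand-in for the wrapped object (e.g. a database).
type store struct{ name string }

// readyStore can be handed out immediately; calls fail with errNotReady
// until the real store is installed.
type readyStore struct {
	mtx sync.RWMutex
	s   *store
}

// Set installs the underlying store.
func (r *readyStore) Set(s *store) {
	r.mtx.Lock()
	defer r.mtx.Unlock()
	r.s = s
}

// get returns the current store, or nil if none has been set.
func (r *readyStore) get() *store {
	r.mtx.RLock()
	defer r.mtx.RUnlock()
	return r.s
}

// Name delegates to the underlying store once it is available.
func (r *readyStore) Name() (string, error) {
	if s := r.get(); s != nil {
		return s.name, nil
	}
	return "", errNotReady
}

func main() {
	var rs readyStore
	_, err := rs.Name()
	fmt.Println(err) // not ready

	rs.Set(&store{name: "tsdb"})
	name, err := rs.Name()
	fmt.Println(name, err) // tsdb <nil>
}
```

The read lock is held only long enough to copy the pointer, so slow operations on the underlying object do not block a later `Set`.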

// tsdbOptions is a version of tsdb.Options with defined units.

// This is required because the time fields of tsdb.Options are unit agnostic.

type tsdbOptions struct {

WALSegmentSize units.Base2Bytes

RetentionDuration model.Duration

MaxBytes units.Base2Bytes

NoLockfile bool

AllowOverlappingBlocks bool

WALCompression bool

StripeSize int

MinBlockDuration model.Duration

MaxBlockDuration model.Duration

}

func (opts tsdbOptions) ToTSDBOptions() tsdb.Options {

return tsdb.Options{

WALSegmentSize: int(opts.WALSegmentSize),

RetentionDuration: int64(time.Duration(opts.RetentionDuration) / time.Millisecond),

MaxBytes: int64(opts.MaxBytes),

NoLockfile: opts.NoLockfile,

AllowOverlappingBlocks: opts.AllowOverlappingBlocks,

WALCompression: opts.WALCompression,

StripeSize: opts.StripeSize,

MinBlockDuration: int64(time.Duration(opts.MinBlockDuration) / time.Millisecond),

MaxBlockDuration: int64(time.Duration(opts.MaxBlockDuration) / time.Millisecond),

}

}

// initTracing sets up Jaeger distributed tracing.

func initTracing(logger log.Logger) (io.Closer, error) {

// Set tracing configuration defaults.

cfg := &jcfg.Configuration{

ServiceName: "prometheus",

Disabled: true,

}

// Available options can be seen here:

// https://github.com/jaegertracing/jaeger-client-go#environment-variables

cfg, err := cfg.FromEnv()

if err != nil {

return nil, errors.Wrap(err, "unable to get tracing config from environment")

}

jLogger := jaegerLogger{logger: log.With(logger, "component", "tracing")}

tracer, closer, err := cfg.NewTracer(

jcfg.Logger(jLogger),

jcfg.Metrics(jprom.New()),

)

if err != nil {

return nil, errors.Wrap(err, "unable to init tracing")

}

opentracing.SetGlobalTracer(tracer)

return closer, nil

}

type jaegerLogger struct {

logger log.Logger

}

func (l jaegerLogger) Error(msg string) {

level.Error(l.logger).Log("msg", msg)

}

func (l jaegerLogger) Infof(msg string, args ...interface{}) {

keyvals := []interface{}{"msg", fmt.Sprintf(msg, args...)}

level.Info(l.logger).Log(keyvals...)

}